Computer Architecture Definition

To define computer architecture, it helps to first understand what the word architecture means when it is applied to computers. The term sounds complicated, but its definition is simpler than it seems.

Computer architecture is a science. It is a set of rules that describes how computer software and hardware fit together and interact to make a computer work. So, how do you explain computer architecture? Put simply, it is the set of rules that governs how a computer system is organized and how it operates.

What is Computer Architecture?

Computer architecture is defined as a specification that describes how computer hardware and computer software technologies interact to create a computer platform or system. When you think of the word architecture, you probably think of designing a house or other structure. On the same principle, architecture in computers involves designing a computer and everything that goes into a computer system.

Computer architecture is usually divided into three main categories:

- System design – Includes all the hardware components, such as the CPU, data processors, multiprocessors, memory controllers, and direct memory access (DMA). This is the physical computer system.

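- Instruction set architecture (ISA) – Defines a CPU's functions and capabilities as software sees them: the instructions programmers and compilers can use, the data formats it supports, its processor register types, and how it addresses memory. The ISA is not itself software; it is the interface between the hardware and the software that runs on it. Programs written in programming languages are compiled into its instructions, and operating systems such as Windows are built for a particular ISA, such as x86-64 or ARM.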

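- Microarchitecture – Also called computer organization, this describes how a particular processor implements its ISA: its data paths and its data processing and storage elements, such as execution units, registers, and caches, and how they are connected.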
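To make the split between the instruction set architecture and the microarchitecture more concrete, here is a minimal Python sketch of a hypothetical accumulator machine. The instruction names (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the `run` function are all invented for illustration and do not correspond to any real processor. The list of instructions and what each one does plays the role of the ISA; the loop that fetches, decodes, and executes them is just one possible implementation of that ISA, standing in for a microarchitecture.

```python
from typing import Dict, List, Tuple

# Hypothetical toy ISA (illustration only): each instruction is (opcode, address).
Instruction = Tuple[str, int]


def run(program: List[Instruction], memory: Dict[int, int]) -> Dict[int, int]:
    """Execute a program on one possible implementation of the toy ISA."""
    acc = 0  # the single accumulator register the toy ISA exposes to programs
    pc = 0   # program counter: index of the next instruction to fetch
    while pc < len(program):
        opcode, address = program[pc]  # fetch and decode
        pc += 1
        if opcode == "LOAD":      # ACC <- memory[address]
            acc = memory.get(address, 0)
        elif opcode == "ADD":     # ACC <- ACC + memory[address]
            acc += memory.get(address, 0)
        elif opcode == "STORE":   # memory[address] <- ACC
            memory[address] = acc
        elif opcode == "HALT":    # stop execution
            break
        else:
            raise ValueError(f"illegal instruction: {opcode}")
    return memory


# Compute memory[2] = memory[0] + memory[1]
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", 0)]
print(run(program, {0: 40, 1: 2}))  # {0: 40, 1: 2, 2: 42}
```

A real processor could carry out the same four instructions with a pipeline, extra execution units, or caches, and a program written for this toy ISA would still produce the same result. That separation is what lets software target an ISA rather than one specific chip design.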

The structure of computer architecture

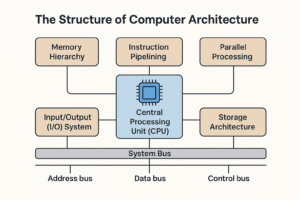

While computer architectures can differ greatly depending on the purpose of the computer, several key components generally contribute to the structure of computer architecture:

- Central Processing Unit (CPU) – Often referred to as the “brain” of the computer, the CPU executes instructions, performs calculations, and manages data. Its architecture dictates factors such as instruction set, clock speed, and cache hierarchy, all of which significantly impact overall system performance.

- Memory Hierarchy – This includes various types of memory, such as cache memory, random access memory (RAM), and storage devices. The memory hierarchy plays a crucial role in reducing data access times: levels closer to the CPU, such as cache, are smaller and faster, so frequently accessed data is kept there, while less frequently used data stays in larger, slower levels further from the CPU.

- Input/Output (I/O) System – The I/O system enables communication between the computer and external devices, such as keyboards, monitors, and storage devices. It involves designing efficient data transfer mechanisms to ensure smooth interaction and data exchange.

- Storage Architecture – This deals with how data is stored and retrieved from storage devices like hard drives, solid-state drives (SSDs), and optical drives. Efficient storage architectures ensure data integrity, availability, and fast access times.

- Instruction Pipelining – Modern CPUs employ pipelining, a technique that breaks down instruction execution into multiple stages. Because each stage can work on a different instruction at the same time, the CPU overlaps the execution of several instructions, which improves throughput (the number of instructions completed per unit of time).

- Parallel Processing – This involves dividing a task into smaller subtasks and executing them concurrently, often on multiple cores or processors. Parallel processing can significantly accelerate computations that split well into independent pieces, making it key to tasks like simulations, video rendering, and machine learning.
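The effect of some of these components on performance can be estimated with simple textbook formulas. The Python sketch below assumes an ideal pipeline (one stage per clock cycle, no stalls or hazards), a single cache level described by its hit time, miss rate, and miss penalty in clock cycles, and Amdahl's law for a task in which only a fraction of the work can run in parallel. The function names and the sample numbers are hypothetical, chosen only for illustration and not measured on any real system.

```python
def pipeline_cycles(n_instructions: int, n_stages: int, pipelined: bool) -> int:
    """Ideal cycle count, assuming one stage per cycle and no stalls."""
    if n_instructions == 0:
        return 0
    if pipelined:
        # The first instruction needs n_stages cycles to pass through the
        # pipeline; each later one completes one cycle after the previous one.
        return n_stages + n_instructions - 1
    # Without pipelining, each instruction finishes all stages before the next starts.
    return n_stages * n_instructions


def average_access_time(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time (AMAT) for a single cache level, in cycles."""
    return hit_time + miss_rate * miss_penalty


def amdahl_speedup(parallel_fraction: float, n_processors: int) -> float:
    """Amdahl's law: overall speedup when only part of a task runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


print(pipeline_cycles(100, 5, pipelined=False))          # 500
print(pipeline_cycles(100, 5, pipelined=True))           # 104
print(round(average_access_time(1.0, 0.05, 100.0), 2))   # 6.0
print(round(amdahl_speedup(0.9, 8), 2))                  # 4.71
```

With these assumed numbers, pipelining 100 instructions through 5 stages cuts the ideal cycle count from 500 to 104, a 5% cache miss rate with a 100-cycle penalty raises the average access time from 1 to 6 cycles, and 8 processors speed up a task that is 90% parallel by only about 4.7 times, because the serial 10% does not get any faster.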

The physical components above (the CPU, memory, storage, and I/O devices) are connected through a system bus consisting of the address bus, data bus, and control bus. The diagram below is an example of this structure: